Several years ago, one of the first blog posts I tackled covered working in the lab with N_Port ID Virtualization, often referred to as NPIV for short. The blog post was titled N_Port ID Virtualization (NPIV) and VMware Virtual Infrastructure. At the time it was one of the few blog posts available on the subject because it was a relatively new feature offered by VMware. In the years that followed, I haven’t heard much about adoption by customers. Likewise, VMware hasn’t put much effort into improving NPIV support in vSphere or promoting its use. One might wonder which is the cause and which is the effect. I feel there’s a mutual understanding between both parties that NPIV in its current state isn’t exciting enough to deploy and that the benefits fall into a very narrow band of interest (VMware: give us in-guest virtual Fibre Channel – that would be interesting).

Despite its market penetration challenges, from time to time I do receive an email from someone referring to my original NPIV blog post looking for some help in deploying or troubleshooting NPIV. The nature of the request is common and it typically falls into one of two categories:

- How can I set up NPIV with a fibre channel tape library?

- Help – I can’t get NPIV working.

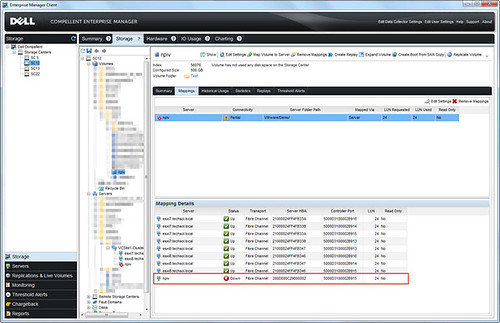

I received such a request from the field a few weeks ago, asking for general assistance in setting up NPIV with Dell Compellent storage. The correct steps were followed to the best of their knowledge, but the virtual WWPNs that were initialized at VM power-on would not stay lit after the VM began to POST. In Dell Enterprise Manager, the path to the virtual machine’s assigned WWPN was down. Although the RDM storage presentation was functioning, it was only working through the vSphere host HBAs and not the NPIV WWPN. This effectively means that NPIV is not working.

In addition, the NPIV initialization failure is reflected in the vmkernel.log:

2014-12-15T16:32:28.694Z cpu25:33505)qlnativefc: vmhba64(41:0.0): vlan_id: 0x0

2014-12-15T16:32:28.694Z cpu25:33505)qlnativefc: vmhba64(41:0.0): vn_port_mac_address: 00:00:00:00:00:00

2014-12-15T16:32:28.793Z cpu25:33505)qlnativefc: vmhba64(41:0.0): Assigning new target ID 0 to fcport 0x410a524d89a0

2014-12-15T16:32:28.793Z cpu25:33505)qlnativefc: vmhba64(41:0.0): fcport 5000d3100002b916 (targetId = 0) ONLINE

2014-12-15T16:32:28.809Z cpu27:33505)qlnativefc: vmhba64(41:0.0): Assigning new target ID 1 to fcport 0x410a524d9260

2014-12-15T16:32:28.809Z cpu27:33505)qlnativefc: vmhba64(41:0.0): fcport 5000d3100002b90c (targetId = 1) ONLINE

2014-12-15T16:32:28.825Z cpu27:33505)qlnativefc: vmhba64(41:0.0): Assigning new target ID 2 to fcport 0x410a524d93e0

2014-12-15T16:32:28.825Z cpu27:33505)qlnativefc: vmhba64(41:0.0): fcport 5000d3100002b915 (targetId = 2) ONLINE

2014-12-15T16:32:28.841Z cpu27:33505)qlnativefc: vmhba64(41:0.0): Assigning new target ID 3 to fcport 0x410a524d9560

2014-12-15T16:32:28.841Z cpu27:33505)qlnativefc: vmhba64(41:0.0): fcport 5000d3100002b90b (targetId = 3) ONLINE

2014-12-15T16:32:30.477Z cpu22:19117991)WARNING: ScsiPsaDriver: 1272: Failed adapter create path; vport:vmhba64 with error: bad0040

2014-12-15T16:32:32.477Z cpu22:19117991)WARNING: ScsiPsaDriver: 1272: Failed adapter create path; vport:vmhba64 with error: bad0040

2014-12-15T16:32:34.480Z cpu22:19117991)WARNING: ScsiPsaDriver: 1272: Failed adapter create path; vport:vmhba64 with error: bad0040

2014-12-15T16:32:36.480Z cpu22:19117991)WARNING: ScsiPsaDriver: 1272: Failed adapter create path; vport:vmhba64 with error: bad0040

2014-12-15T16:32:38.482Z cpu22:19117991)ScsiNpiv: 1152: NPIV vport rescan complete, [5:24] (0x410943893dc0) [0x410943680ec0] status=0xbad0040

2014-12-15T16:32:38.503Z cpu22:19117991)ScsiScan: 140: Path 'vmhba2:C0:T3:L24': Peripheral qualifier 0x1 not supported

2014-12-15T16:32:38.503Z cpu22:19117991)WARNING: ScsiNpiv: 1141: Physical uid does not match VPORT uid, NPIV Disabled for this VM

2014-12-15T16:32:38.503Z cpu22:19117991)ScsiNpiv: 1152: NPIV vport rescan complete, [3:24] (0x410943856e80) [0x410943680ec0] status=0xbad0132

2014-12-15T16:32:38.503Z cpu22:19117991)WARNING: ScsiNpiv: 1788: Failed to Create vport for world 19117994, vmhba2, rescan failed, status=bad0001

2014-12-15T16:32:38.504Z cpu14:33509)ScsiAdapter: 2806: Unregistering adapter vmhba64
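When sifting through a much longer vmkernel.log, the two ScsiNpiv warnings near the end of the excerpt above are the lines worth searching for. Here is a minimal Python sketch of that search. Treating those two message strings as failure markers is my assumption based on this one case, not a documented VMware list, and the function name is made up for illustration.

```python
# Message fragments copied from the failure excerpt above. Using them as
# failure markers is an assumption of this sketch, not an official list.
FAILURE_MARKERS = (
    "NPIV Disabled for this VM",
    "Failed to Create vport",
)


def find_npiv_failures(log_text):
    """Return the log lines that contain one of the failure markers."""
    return [
        line.strip()
        for line in log_text.splitlines()
        if any(marker in line for marker in FAILURE_MARKERS)
    ]


# Three lines taken from the excerpt above; only the first two should match.
sample = """\
2014-12-15T16:32:38.503Z cpu22:19117991)WARNING: ScsiNpiv: 1141: Physical uid does not match VPORT uid, NPIV Disabled for this VM
2014-12-15T16:32:38.503Z cpu22:19117991)WARNING: ScsiNpiv: 1788: Failed to Create vport for world 19117994, vmhba2, rescan failed, status=bad0001
2014-12-15T16:32:38.504Z cpu14:33509)ScsiAdapter: 2806: Unregistering adapter vmhba64
"""

for hit in find_npiv_failures(sample):
    print(hit)
```

The repeated "Failed adapter create path" warnings are deliberately left out of the marker list because they also show up when NPIV comes up successfully.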

To review, the requirements for implementing NPIV with vSphere are documented by VMware and I outlined the key ones in my original blog post:

- NPIV support on the fabric switches (typically found in 4Gbps or higher fabric switches but I’ve seen firmware support in 2Gbps switches also)

- NPIV support on the vSphere host HBAs (this typically means 4Gbps or higher port speeds)

- NPIV support from the storage vendor

- A vSphere version that supports NPIV

- vSphere Raw Device Mapping

- Correct fabric zoning configured between host HBAs, the virtual machine’s assigned WWPN(s), and the storage front end ports

- Storage presentation to the vSphere host HBAs as well as the virtual machine’s assigned NPIV WWPN(s)

If any of the above requirements are not met (plus a handful of others and we’ll get to one of them shortly), vSphere’s NPIV feature will likely not function.

In this particular case, the general NPIV requirements were met. However, a best practice had been missed in configuring the QLogic HBA BIOS (the QLogic BIOS is accessed at host reboot by pressing CTRL + Q or ALT + Q when prompted). Connection Options remained at its factory default value of 2 (Loop preferred, otherwise point to point).

Dell Compellent storage with vSphere best practices call for this value to be set to 1 (Point to point only). When the HBA has multiple ports, this setting needs to be made on every port that is used for Dell Compellent storage connectivity. It also applies across all of the fabric-attached hosts in the vSphere cluster.

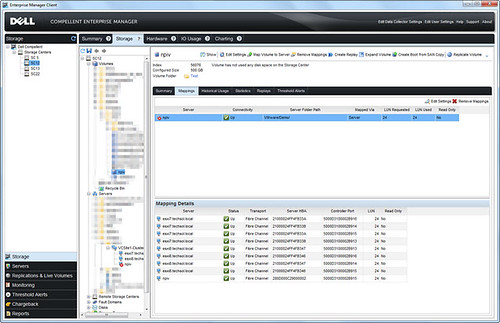
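Because the setting has to match on every port of every host, it can help to write down what each port is set to and check the list in one pass. The sketch below assumes a hand-collected inventory. The host names, adapter names, and the `noncompliant_ports` helper are hypothetical, and no QLogic or VMware API is being called. Only the two Connection Options values mentioned above are labeled.

```python
# Connection Options values as described above.
LABELS = {
    1: "Point to point only",
    2: "Loop preferred, otherwise point to point",
}
REQUIRED = 1  # the value the Dell Compellent best practice calls for

# Hypothetical inventory, recorded by hand from each host's QLogic BIOS:
# (host, adapter) -> Connection Options value.
inventory = {
    ("esx01", "vmhba2"): 1,
    ("esx01", "vmhba3"): 2,
    ("esx02", "vmhba2"): 1,
}


def noncompliant_ports(inv, required=REQUIRED):
    """Return the (host, adapter) pairs whose value differs from `required`."""
    return sorted(port for port, value in inv.items() if value != required)


for host, adapter in noncompliant_ports(inventory):
    value = inventory[(host, adapter)]
    print(f"{host} {adapter}: {value} ({LABELS.get(value, 'unlabeled value')})")
```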

Once the HBA ports were configured for point-to-point connectivity on the fabric, the problem was resolved.

Despite the various error messages returned as vSphere probes for possible combinations between the vSphere assigned virtual WWPN and the host WWPNs, NPIV success looks something like this in the vmkernel.log (you’ll notice subtle differences showing success compared to the failure log messages above):

2014-12-15T18:43:52.270Z cpu29:33505)qlnativefc: vmhba64(41:0.0): vlan_id: 0x0

2014-12-15T18:43:52.270Z cpu29:33505)qlnativefc: vmhba64(41:0.0): vn_port_mac_address: 00:00:00:00:00:00

2014-12-15T18:43:52.436Z cpu29:33505)qlnativefc: vmhba64(41:0.0): Assigning new target ID 0 to fcport 0x410a4a569960

2014-12-15T18:43:52.436Z cpu29:33505)qlnativefc: vmhba64(41:0.0): fcport 5000d3100002b916 (targetId = 0) ONLINE

2014-12-15T18:43:52.451Z cpu29:33505)qlnativefc: vmhba64(41:0.0): Assigning new target ID 1 to fcport 0x410a4a569ae0

2014-12-15T18:43:52.451Z cpu29:33505)qlnativefc: vmhba64(41:0.0): fcport 5000d3100002b90c (targetId = 1) ONLINE

2014-12-15T18:43:52.466Z cpu29:33505)qlnativefc: vmhba64(41:0.0): Assigning new target ID 2 to fcport 0x410a4a569c60

2014-12-15T18:43:52.466Z cpu29:33505)qlnativefc: vmhba64(41:0.0): fcport 5000d3100002b915 (targetId = 2) ONLINE

2014-12-15T18:43:52.481Z cpu29:33505)qlnativefc: vmhba64(41:0.0): Assigning new target ID 3 to fcport 0x410a4a569de0

2014-12-15T18:43:52.481Z cpu29:33505)qlnativefc: vmhba64(41:0.0): fcport 5000d3100002b90b (targetId = 3) ONLINE

2014-12-15T18:43:54.017Z cpu0:36379)WARNING: ScsiPsaDriver: 1272: Failed adapter create path; vport:vmhba64 with error: bad0040

2014-12-15T18:43:56.018Z cpu0:36379)WARNING: ScsiPsaDriver: 1272: Failed adapter create path; vport:vmhba64 with error: bad0040

2014-12-15T18:43:58.020Z cpu0:36379)WARNING: ScsiPsaDriver: 1272: Failed adapter create path; vport:vmhba64 with error: bad0040

2014-12-15T18:44:00.022Z cpu0:36379)WARNING: ScsiPsaDriver: 1272: Failed adapter create path; vport:vmhba64 with error: bad0040

2014-12-15T18:44:02.024Z cpu0:36379)ScsiNpiv: 1152: NPIV vport rescan complete, [4:24] (0x4109436ce9c0) [0x410943684040] status=0xbad0040

2014-12-15T18:44:02.026Z cpu2:36379)ScsiNpiv: 1152: NPIV vport rescan complete, [2:24] (0x41094369ca40) [0x410943684040] status=0x0

2014-12-15T18:44:02.026Z cpu2:36379)ScsiNpiv: 1701: Physical Path : adapter=vmhba3, channel=0, target=5, lun=24

2014-12-15T18:44:02.026Z cpu2:36379)ScsiNpiv: 1701: Physical Path : adapter=vmhba2, channel=0, target=2, lun=24

2014-12-15T18:44:02.026Z cpu2:36379)WARNING: ScsiPsaDriver: 1272: Failed adapter create path; vport:vmhba64 with error: bad0040

2014-12-15T18:44:04.028Z cpu2:36379)WARNING: ScsiPsaDriver: 1272: Failed adapter create path; vport:vmhba64 with error: bad0040

2014-12-15T18:44:06.030Z cpu2:36379)WARNING: ScsiPsaDriver: 1272: Failed adapter create path; vport:vmhba64 with error: bad0040

2014-12-15T18:44:08.033Z cpu2:36379)WARNING: ScsiPsaDriver: 1272: Failed adapter create path; vport:vmhba64 with error: bad0040

2014-12-15T18:44:10.035Z cpu2:36379)WARNING: ScsiPsaDriver: 1272: Failed adapter create path; vport:vmhba64 with error: bad0040

2014-12-15T18:44:12.037Z cpu2:36379)ScsiNpiv: 1152: NPIV vport rescan complete, [4:24] (0x4109436ce9c0) [0x410943684040] status=0xbad0040

2014-12-15T18:44:12.037Z cpu2:36379)ScsiNpiv: 1160: NPIV vport rescan complete, [2:24] (0x41094369ca40) [0x410943684040] vport exists

2014-12-15T18:44:12.037Z cpu2:36379)ScsiNpiv: 1701: Physical Path : adapter=vmhba3, channel=0, target=2, lun=24

2014-12-15T18:44:12.037Z cpu2:36379)ScsiNpiv: 1848: Vport Create status for world:36380 num_wwpn=1, num_vports=1, paths=4, errors=3
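The final "Vport Create status" line is the quickest one to check, since it summarizes the whole attempt. Below is a minimal Python sketch that pulls the counters out of that line. The regular expression is written against the exact format shown in this excerpt, and the rule of thumb in `npiv_looks_healthy` (at least one vport per requested WWPN) is my own assumption rather than anything VMware documents.

```python
import re

# Pattern written against the summary line in the excerpt above; other
# vSphere builds may format this message differently.
VPORT_STATUS = re.compile(
    r"Vport Create status for world:(?P<world>\d+) "
    r"num_wwpn=(?P<num_wwpn>\d+), num_vports=(?P<num_vports>\d+), "
    r"paths=(?P<paths>\d+), errors=(?P<errors>\d+)"
)


def parse_vport_status(line):
    """Return the counters from a summary line as ints, or None if no match."""
    match = VPORT_STATUS.search(line)
    if match is None:
        return None
    return {key: int(value) for key, value in match.groupdict().items()}


def npiv_looks_healthy(status):
    """Assumed rule of thumb: every requested WWPN got a vport."""
    # The errors counter is ignored on purpose: it is nonzero (3) in the
    # successful excerpt above.
    return status["num_vports"] >= status["num_wwpn"] > 0


line = (
    "2014-12-15T18:44:12.037Z cpu2:36379)ScsiNpiv: 1848: Vport Create status "
    "for world:36380 num_wwpn=1, num_vports=1, paths=4, errors=3"
)
status = parse_vport_status(line)
print(status)
print("NPIV looks healthy:", npiv_looks_healthy(status))
```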

One last item I’ll note here for posterity is that in this particular case, the problem did not present itself uniformly across all storage platforms. This prolonged troubleshooting to a degree because the vSphere cluster was successful in establishing NPIV fabric connectivity to two other types of storage using the same vSphere hosts, hardware, and fabric switches. Because of this, in the beginning it seemed logical to rule out any configuration issues within the vSphere hosts.

To summarize, there are many technical requirements outlined in VMware documentation to correctly configure NPIV. If you’ve followed VMware’s steps correctly but problems with NPIV remain, refer to storage, fabric, and hardware documentation and verify best practices are being met in the deployment.
