200 million years from now, divers off the west coast of the U.S. will make an incredible discovery. Miles beneath the Pacific Ocean, in a location once known as the Moscone Center in San Francisco, evidence will emerge which reveals spectacular gatherings that once took place. Humans from around the globe would assemble semi-annually to celebrate virtualization and cloud technologies from a company named VMware which made its mark throughout history as the undisputed and mostly uncontested leader in its space. What this company did changed the way mankind did business forever. Companies and consumers alike were provided with tremendous advantages, flexibility, and cost savings.

At these events, massive amounts of compute resources were harnessed to power “virtual laboratories”. These laboratories (or labs as they were called for short) were dynamically provisioned on demand and at large scale by the attendees themselves. Archaeologists in Miami, Florida and Ashburn, Virginia made similar discoveries and they believe that the three sites were somehow linked together for the twice-a-year event called “VMworld”. Scientists estimate that the combined amount of resources would easily be able to support the deployment of 50,000+ “virtual machines” in just a few days.

How did they accomplish this? Without a doubt, by automating. The fossilized remains suggest they may have used one of their own development products called “Lab Manager”, which was first introduced in the year 2006 A.D. and retired in favor of vCloud Director just seven years later in 2013 according to the scriptures. Lab Manager was a special use case tool that helped many businesses with internal software development processes flourish, and it became a whole lot more when it morphed into vCD. What wasn’t widely shared or known beyond the VMware staff was that it shipped with some special abilities that were locked and hidden. Scientists believe these abilities assisted in the automated deployment of virtualized ESX and ESXi hosts within Lab Manager. This was the key to automating the VMworld labs. Details aren’t 100% complete but there’s enough information such that future researchers may be able to find or synthesize the missing DNA to recreate a functional replica of what once existed.

Disclaimer: What follows is not supported by VMware. Before you get carried away with excitement, ask yourself if this is something you should be doing in your environment.

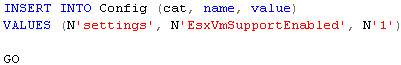

The Lab Manager 4 configuration is stored in a SQL Express database installed locally on the Lab Manager 4 server. To unlock the virtualized ESX(i) support, a hidden switch must be flipped in the database. Add a row to the “Config” table in the Lab Manager database:

Cat: settings

Name: EsxVmSupportEnabled

Value: 1

This can be accomplished by:

- granting a domain account the SysAdmin role using the SQL Server 2005 Surface Area Configuration tool inside the Lab Manager server

- and then executing the following query via Microsoft SQL Server Management Studio on a remote SQL 2005 server (or use OSQL locally if you know how that tool works):

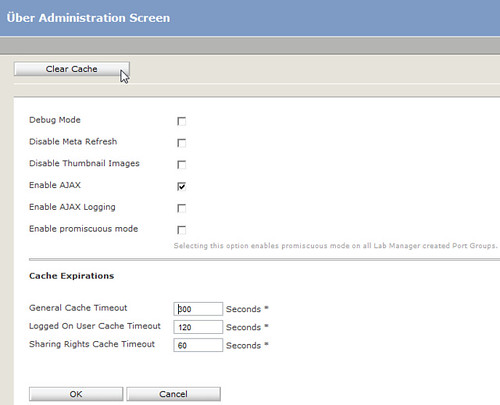
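In case the screenshot doesn't render, here is a rough sketch of what that row insert amounts to. It is not the original query: it runs against an in-memory SQLite table standing in for the Lab Manager SQL Express database, and the column names (`Cat`, `Name`, `Value`) are assumed from the field labels above, so the real schema may differ. The function name `enable_esx_vm_support` is made up for illustration.

```python
import sqlite3

# Hypothetical stand-in for the Lab Manager "Config" table. The column names
# are assumed from the labels in the post; the real schema may differ.
SCHEMA = "CREATE TABLE Config (Cat TEXT, Name TEXT, Value TEXT)"


def enable_esx_vm_support(conn):
    """Add the settings/EsxVmSupportEnabled=1 row unless it is already there.

    Returns True if a row was inserted, False if it already existed.
    """
    cur = conn.execute(
        "SELECT COUNT(*) FROM Config WHERE Cat = ? AND Name = ?",
        ("settings", "EsxVmSupportEnabled"),
    )
    if cur.fetchone()[0] > 0:
        return False
    conn.execute(
        "INSERT INTO Config (Cat, Name, Value) VALUES (?, ?, ?)",
        ("settings", "EsxVmSupportEnabled", "1"),
    )
    conn.commit()
    return True


# Demonstrate against a throwaway in-memory database.
conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
first = enable_esx_vm_support(conn)   # inserts the row
second = enable_esx_vm_support(conn)  # no-op, row already present
rows = conn.execute("SELECT Cat, Name, Value FROM Config").fetchall()
print(rows)
```

Checking for the row before inserting keeps the change safe to repeat, which seems wise when poking at an unsupported setting. Against the real database the same two statements would be run through Management Studio or OSQL as described above.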

The next step is to Clear Cache via the Uber Administration Screen in the Lab Manager web interface (this screen is available with or without the above database hack). How does one get to this uber-admin page? Log into the Lab Manager web interface as an administrator and click the About hyperlink in the Support menu on the left edge. Once at the About page, use CTRL+U to access the uber-admin page. Click the Clear Cache button:

Next step. By virtue of having installed and performed the initial configuration of Lab Manager at this point, it is assumed one has already prepared the Lab Manager hosts with the default Lab Manager Agent. To facilitate the automated deployment of virtual ESX(i) hosts in Lab Manager, the special Lab Manager agents with ESX-VM support need to be installed. To do this, simply Disable your Lab Manager hosts, Unprepare each Lab Manager host, then Prepare again. Because the hidden database switch was flipped in a previous step, Lab Manager will now install the ESX-VM-specific Lab Manager agent on each ESX(i) host.

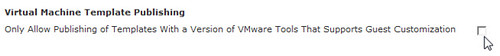

The next two steps do not exploit a hidden feature, however, they do need to be followed for virtual ESX(i) deployment. Navigate to Settings | Guest Customization. Uncheck the box labeled Only Allow Publishing of Templates With a Version of VMware Tools That Supports Guest Customization.

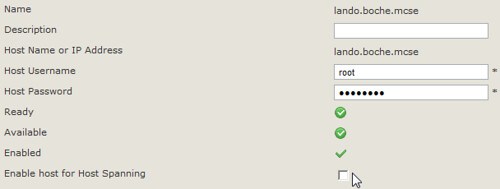

In the final step, Enterprise Plus customers making use of the vDS must disable host spanning on each Lab Manager host by unchecking the box Enable host for Host Spanning:

Now that the required changes have been made to support virtual ESX(i) hosts in Lab Manager, the resulting changes can be seen within Lab Manager.

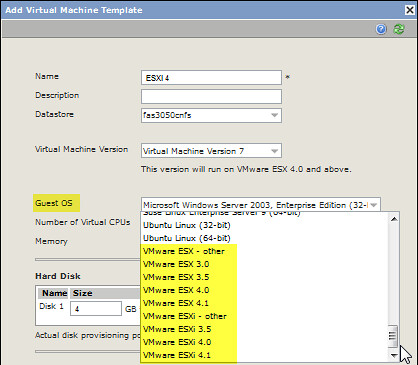

Create a new VM Template. I’ll call this one ESXi 4. Take a look at the new virtualized VMware ESX(i) Guest OS types that are now available for templating and ultimately deployment:

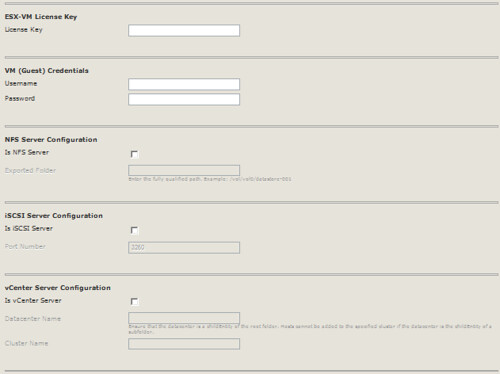

Immediately after creating the base template, select it and choose Properties. Here we see several new fields for automating the deployment of virtual ESX(i) hosts: Licensing, credentials, shared storage connectivity, and vCenter configuration:

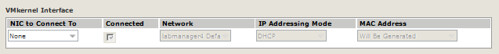

For an ESX guest OS type, an additional field for configuring a VMkernel interface is made available:

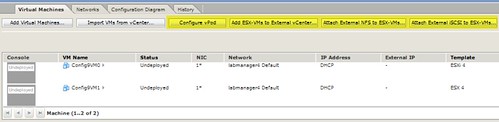

Finally, create a Configuration using one or more of the new virtual ESX(i) templates and take a look at the custom buttons that show up: Configure vPod, Add ESX-VMs to External vCenter, Attach External NFS to ESX-VMs, and Attach External iSCSI to ESX-VMs. These added functions could be used for manual provisioning post deployment, copying files, or for troubleshooting:

This is enough to get started and experiment with. Unfortunately, it’s not 100% complete. What’s missing is a guest customization script which runs inside the virtual ESX(i) host post deployment and contains more of the automation needed to deploy unique and properly configured virtual ESX(i) hosts in Lab Manager. Perhaps one day these scripts will be discovered and shared, or recreated.

Good find and good post, Jason!

Thanks for sharing this Jason, you saved me a lot of grief!

Good find — and, more importantly, nicely written and entertaining 🙂

Thanks Doug. The intro served a specific purpose which I won’t go into detail about but suffice to say it wasn’t because I was in a story telling mood. Before posting, I actually removed some intro content because frankly it just got way too fanboi cheesy, even for me. 🙂

Does anyone know of a way to get VMware Tools on a vESXi host?

That would be baller; but isn’t necessary.

Now the question is Jason, how did you get this information?

Does anyone know of a way to achieve the same results in vCD 1.5?

Jason,

Have you found a way to get the ESX(i) guest types to appear in vCloud Director, specifically 1.5? I have enabled vhv but that database hack doesn’t enable the other guest types.

Not yet but William Lam has been spending some time trying to crack this nut. VMware pretty much has this down to a science & uses it to serve up their labs at VMworld. However, enabling ESXi deployment in vCD is just one component of the entire deployment process. Based on how I heard it described in a session at VMworld 2011 in Vegas, I’d say deploying ESXi as a guest OS with vCD accounts for about 35% of the process. There’s also a lot of automation and customization that occurs after the ESXi guest OS is deployed. Mostly custom written components and I’m not talking just PowerShell scripts. VMware pulled together SEs having explicit talents, some of those in coding in various languages. The sum of all of the ingredients is the labs we see at VMworld. It is very impressive & I wish there was some way VMware could share this work with the community (perhaps as a fling).