This was somewhat of a strange post to get off the ground. I had a definite purpose at the beginning and I knew what I was going to write about; however, some lab scenarios unexpectedly sent me on the scenic route to the end.

In my mind, the topic started out as “Effective/Efficient Use of Update Manager For New Builds”.

Then, while working in the lab, the title changed to “Gosh, Update Manager Is Slow”.

A while later it morphed into “Cripes, What In The Heck Is Update Manager Doing?!”

Finally I had a revelation and the topic came full circle back to an appropriate title of “VMware Update Manager, Updates, and New Builds”, which is more or less what I had in mind to begin with. As I said, though, I picked up some information along the way that I hadn’t recognized at the beginning.

“Effective/Efficient Use of Update Manager For New Builds”

So as I said, the idea of the post started out with a predefined purpose: discussion about the use of Update Manager in host deployments. It really has more to do with host deployment methodology as a basis of discussion than it has to do with patch management. What I was going to highlight was that the deployment of an ESX host goes much quicker if you start out with the most current ESX .ISO allowed in your environment and then use VMware Update Manager to install the remaining patches to bring it to current.

As an example, let’s say our current ESX platform standard is ESX 3.5.0 Update 4 with all patches up to today’s date of 6/6/09.

- The most efficient deployment method would be to perform the initial installation of ESX using the ESX 3.5.0 Update 4 .ISO and then afterwards, use VMware Update Manager to install the remaining 15 patches through today’s date. Using Ultimate Deployment Appliance version 1.4, I can deploy ESX 3.5.0 with Update 4 in five minutes. Installing the subsequent 15 patches using VMware Update Manager takes an additional 16 minutes, end to end including the reboot. That’s a total of less than 25 minutes to deploy a host with all patches.

- Now let’s look at an alternative and much more time consuming method. Install ESX 3.5.0 using the original or even the Update 1 .ISO. Again, using UDA 1.4, this takes 5 minutes. Now we use Update Manager to remediate the ESX host to Update 4 plus the remaining 15 patches. If you used the original ESX .ISO, you’re looking at 149 updates. If you installed from the ESX 3.5.0 Update 1 .ISO, you’ve got 125 patches to install. This patching process takes nearly 90 minutes! Even on an IBM x3850M2 (one of the fastest hardware platforms available on the market today), the patch process is 75 minutes.
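For anyone who wants to check the math on the two bullets above, here is a quick Python sketch. The timings are the lab numbers quoted above; rounding "nearly 90 minutes" to 90 is my assumption, and the function and variable names are made up for illustration.

```python
# Lab timings in minutes, taken from the two deployment scenarios above.
INSTALL_MIN = 5            # UDA 1.4 install of any ESX 3.5.0 .ISO
PATCH_MIN_UPDATE4 = 16     # 15 post-Update 4 patches, including the reboot
PATCH_MIN_OLDER_ISO = 90   # assumption: "nearly 90 minutes" rounded to 90


def deploy_minutes(patch_minutes, install_minutes=INSTALL_MIN):
    """Total minutes for one host: scripted install plus remediation."""
    return install_minutes + patch_minutes


current_iso = deploy_minutes(PATCH_MIN_UPDATE4)
older_iso = deploy_minutes(PATCH_MIN_OLDER_ISO)

print(f"Update 4 .ISO + 15 patches:  {current_iso} min")
print(f"Older .ISO + full patch run: {older_iso} min")
print(f"Extra wait per host:         {older_iso - current_iso} min")
```

Swap in your own install and patch timings to see what the gap looks like on your hardware.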

The numbers in the second bullet above speak to the deployment of one host. We always have more than one host in a high availability cluster and a typical environment might have 6, 12, or even 32 hosts in a cluster. Ideally we don’t want to be running hosts in a cluster on different patch levels for an extended duration. Suddenly we’re looking at a long day of work for a 6 node cluster (9.5 hours) and an entire weekend gone for a cluster of 12 hosts or more (18 hours +). The kicker is that this is still an automated deployment. Automation usually means efficiency right? Not in this case. Granted, there’s not a lot of manual labor involved here, but there is a lot of “hurry up and wait”.
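The cluster totals above are simply the per-host time multiplied out, one host at a time. A rough Python sketch, assuming 95 minutes per host from an older .ISO (5 to install plus roughly 90 to patch) and 21 minutes per host from the Update 4 .ISO; the function name is hypothetical:

```python
def cluster_hours(hosts, minutes_per_host):
    """Hours to rebuild a cluster strictly one host at a time."""
    return hosts * minutes_per_host / 60


# Per-host totals from the lab numbers; host evacuation time is not included.
OLDER_ISO_MIN = 95     # 5 min install + ~90 min patching (rounded, an assumption)
UPDATE4_ISO_MIN = 21   # 5 min install + 16 min patching

for hosts in (6, 12, 32):
    slow = cluster_hours(hosts, OLDER_ISO_MIN)
    fast = cluster_hours(hosts, UPDATE4_ISO_MIN)
    print(f"{hosts:>2} hosts: {slow:5.1f} h from an older .ISO, "
          f"{fast:4.1f} h from the Update 4 .ISO")
```

With these inputs a 12 host cluster works out to 19 hours, which lines up with the "18 hours +" figure above.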

Now before anyone jumps in and recommends rebuilding all of the hosts concurrently, let’s just count that out as an option because in this scenario, we’re rebuilding an active cluster that can only afford 1 host outage at a time (N+1). I’m actually being generous with the time durations because I’m not even accounting for host evacuations, which at the vCenter default of 2 at a time, can take a long time on densely populated clusters. It’s a real world scenario and if you don’t plan ahead for it, you may find out there is not enough time in a weekend to complete your upgrade.

Moral of this section: When deploying hosts, use the most recent .ISO possible which has all of the updates injected into it up to the release date of the .ISO.

“Gosh, Update Manager Is Slow”

I’ve heard some comments via word of mouth about how slow Update Manager is. Myself, I thought the comments were unfounded. I’ve never had major issues with Update Manager aside from a few nuisances I’ve learned to work around. Having managed ESX environments before the advent of Update Manager, I’m grateful for what Update Manager has brought to the table in lieu of manually populated and managed intranet update repositories. I never really noticed the Update Manager slowness because I was always deploying new host builds from the latest ESX .ISO as I described in the first bullet in the section above, and then applying the few incremental post deployment patches. Deploying the full boat of ESX patches using Update Manager has opened up my eyes as to how painfully slow it can be.

One interesting thing I discovered in the lab is that not only is the patch deployment process longer, the preceding scan process is as well. Both the scan and the remediate steps seem to scale in a linear fashion; whether that is actually true or just a coincidence, who knows. What I mean is that:

- An ESX 3.5.0 Update 4 host took 1 minute to scan and 16 minutes to remediate

- An ESX 3.5.0 Update 1 host took 5 minutes to scan and 84 minutes to remediate

So we’re wasting extra time in both steps of the remediation process: the scan and the remediate.
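To put a number on "scale in a linear fashion", here is a small Python sketch that compares the two lab runs above. It only uses the four timings already listed; the dictionary names are mine, and two data points are obviously not proof of anything.

```python
# Lab timings in minutes for each starting build, from the two runs above.
update4_host = {"scan": 1, "remediate": 16}
update1_host = {"scan": 5, "remediate": 84}

# How many times longer each step takes on the Update 1 host.
scan_ratio = update1_host["scan"] / update4_host["scan"]
remediate_ratio = update1_host["remediate"] / update4_host["remediate"]

print(f"Scan takes      {scan_ratio:.2f}x as long on the Update 1 host")
print(f"Remediate takes {remediate_ratio:.2f}x as long on the Update 1 host")
```

Both steps come out at roughly five times longer, which is what I mean by the two scaling together.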

Moral of this section: Update Manager, ESX patch installation, or both are slow, but it doesn’t have to be that way. Same as the moral of the first section: Avoid this pitfall by using the most recent .ISO possible which has all of the updates injected into it up to the release date of the .ISO.

“Cripes, What In The Heck Is Update Manager Doing?!”

So then curiosity got the best of me and I took the lab experiment a little further. Of the 84 minutes spent remediating the ESX 3.5.0 Update 1 host above, how much of that time was spent installing Update 4, and how much of the time was spent installing the 15 subsequent post Update 4 patches? After all, I already know that remediating the 15 post Update 4 patches by themselves takes only 16 minutes. Will the numbers jibe?

To find out, I deployed an ESX 3.5.0 Update 1 host and created a remediation baseline containing ONLY ESX 3.5.0 Update 4. Big sucker – 723MB, but because it’s just one giant service pack, perhaps it will install quicker than the sum of all its updates. Here’s where I was really wrong.

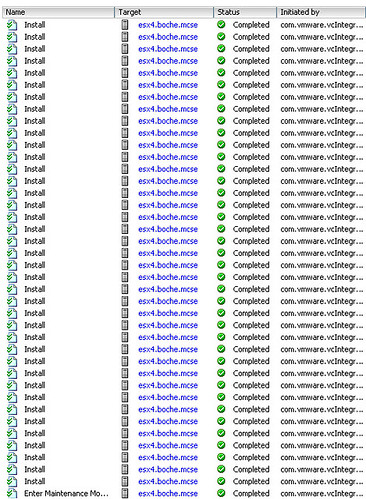

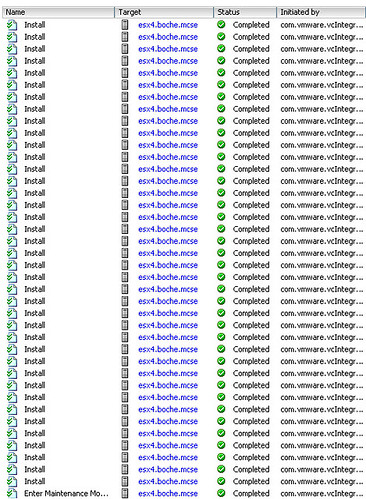

I remediated the host and expected to see 1 task in vCenter describing an installation process, and then a reboot. Instead, I saw a boatload of patches being installed:

Which brings me to the title of this section “Cripes, What In The Heck Is Update Manager Doing?!” Did I apply the wrong baseline? Did Update Manager become self aware like Skynet and decide to engineer its own creative solutions to datacenter problems? Turns out Update 4 is not a patch or a service pack at all. In and of itself, it doesn’t even include binary RPM data. It’s metadata that points to all ESX 3.5.0 patches dated up to and including 3/30/09. Sure, you can download Update 4 as a 724MB offline installation package from the VMware download section, but mosey on over to their patch repository portal and you’ll see that the giant list of superseded and included updates in Update 4 is merely an 8.0K download. At first I thought that had to be a typo and I was about to drop John Troyer an email but opening up that 8K file just for kicks was the eye opener for me. Take a look at the 8K file and you’ll see the metadata that tells Update Manager to go download many of the incremental patches leading up to 3/30/09. Same concept with the 724MB offline installation package. It’s a .ZIP file. Open it up and you won’t find a large 724MB .RPM. Instead you’ll find a directory structure containing many of the incremental updates leading up to 3/30/09.
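If you would rather not click through the .ZIP by hand, a few lines of Python using the standard `zipfile` module can summarize what is inside an offline package. This is only a sketch: the function names are hypothetical, and I am assuming the individual updates inside the package carry `.rpm` files in their own folders.

```python
import zipfile
from collections import Counter
from pathlib import PurePosixPath


def summarize_bundle(zip_source):
    """Count files per top-level entry and collect the .rpm members.

    zip_source may be a path or an open binary file-like object.
    """
    files_per_entry = Counter()
    rpm_members = []
    with zipfile.ZipFile(zip_source) as bundle:
        for info in bundle.infolist():
            if info.is_dir():
                continue  # skip pure directory entries
            parts = PurePosixPath(info.filename).parts
            files_per_entry[parts[0]] += 1
            if info.filename.lower().endswith(".rpm"):
                rpm_members.append((info.filename, info.file_size))
    return files_per_entry, rpm_members


def report(zip_source):
    """Print a short summary: entry count, .rpm count, and the largest .rpm."""
    files_per_entry, rpm_members = summarize_bundle(zip_source)
    print(f"{len(files_per_entry)} top-level entries, "
          f"{len(rpm_members)} .rpm files")
    if rpm_members:
        name, size = max(rpm_members, key=lambda member: member[1])
        print(f"Largest .rpm: {name} ({size / 2**20:.1f} MiB)")
```

Call `report()` with the path to the package you downloaded. Many top-level folders and no single giant .rpm would match what I saw when I opened the file by hand.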

Moral of this section: Same as the moral of the first and second sections: Avoid wasting your valuable maintenance window time by avoiding as many incremental ESX patches as possible. Use the most recent .ISO possible which has all of the updates injected into it up to the release date of the .ISO when you deploy a host.

“VMware Update Manager, Updates, and New Builds”

Connect the dots and I think we’ve got a best practice in the making for host deployments using Update Manager. Existing and new host deployments aside, look at the implications of using Update Manager to deploy a major Update (in this discussion, Update 4). It’s actually 5 times faster to rebuild the host with the integrated Update 4 .ISO than it is to patch it with Update Manager. To me that’s bizarre but it is reality if you have automated host deployment methods. For medium to large environments, automated builds are absolutely required. There’s not enough time in the weekend to patch an 18 host cluster, let alone a 32 node cluster using Update Manager. Rebuild from an updated .ISO or span your host updates over several maintenance windows. The latter could get hairy and I definitely would not recommend it.

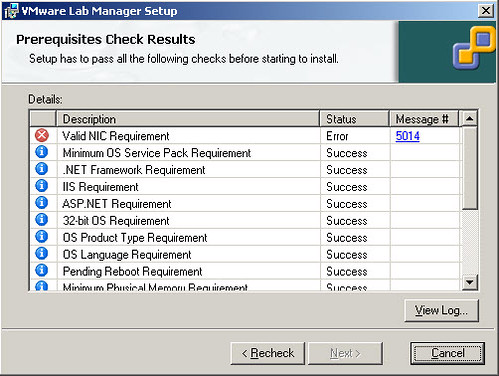

Great day today and I got a lot accomplished in the lab. Unfortunately towards the end, this happened:

Replacement unit is already on the way from NewEgg. Thank you vWire for funding the replacement!