I’ve been running into a DPM issue in the lab recently. Allow me to briefly describe the environment:

- 3 vCenter Server 4.1 instances in Linked Mode

- 1 cluster with 2 hosts

- ESX 4.1, 32GB RAM, ~15% CPU utilization, ~65% memory utilization; host DPM set to Disabled, meaning DPM should never place the host in standby.

- ESXi 4.1, 24GB RAM, ~15% CPU utilization, ~65% memory utilization; host DPM set to Automatic, meaning the host is always a candidate for DPM to place in standby.

- Shared storage

- DRS and DPM enabled for full automation (both configured at Priority 4, almost the most aggressive setting)

Up until recently, the ESX and ESXi hosts weren’t as loaded and DPM was working reliably. Each host had 16GB RAM installed. When aggregate load was light enough, all VMs were moved to the ESX host and the ESXi host was placed in standby mode by DPM. Life was good.

There has been a lot of activity in the lab recently. The ESX and ESXi host memory was upgraded to 32GB and 24GB respectively, and many VMs were added to the cluster and powered on for various projects. The DPM configuration remained as is. What I’m noticing now is that, even with a fairly heavy memory load on both hosts in the cluster, DPM moves all VMs to the ESX host and places the ESXi host in standby mode. This puts tremendous memory pressure and overcommitment on the solitary ESX host. The cluster detects this extreme condition and, nearly as quickly, takes the ESXi host back out of standby mode to balance the load. Then, about an hour later, the process repeats itself.

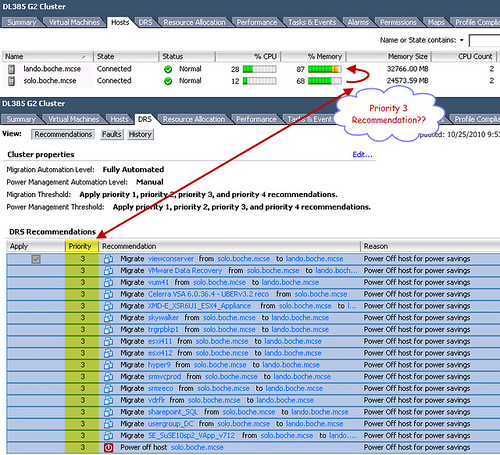
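A rough back-of-the-envelope check shows why consolidating everything onto the ESX host hurts. The sketch below assumes the ~65% memory utilization figures from the environment list represent demand that moves with the VMs, and it ignores transparent page sharing, ballooning, and per-VM overhead. The `hosts` dictionary and the `consolidated_load` helper are purely illustrative.

```python
# Back-of-the-envelope consolidation check (illustrative only).
# Assumption: each host's ~65% memory utilization is demand that moves with
# its VMs. Page sharing, ballooning, and per-VM overhead are ignored.

hosts = {
    "ESX": {"ram_gb": 32, "mem_util": 0.65},
    "ESXi": {"ram_gb": 24, "mem_util": 0.65},
}


def consolidated_load(hosts: dict, survivor: str) -> float:
    """Return the survivor's memory demand as a fraction of its physical RAM
    after the demand of every host in the cluster is placed on it."""
    demand_gb = sum(h["ram_gb"] * h["mem_util"] for h in hosts.values())
    return demand_gb / hosts[survivor]["ram_gb"]


ratio = consolidated_load(hosts, "ESX")
print(f"ESX host after consolidation: {ratio:.0%} of physical RAM")
```

Under those assumptions, the surviving 32GB host would be asked to carry roughly 20.8GB + 15.6GB = 36.4GB of demand, about 114% of its physical RAM. That is consistent with the memory pressure that pulls the ESXi host back out of standby.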

I then switched DPM to manual mode so that I could examine the recommendations being made by the calculator. The VMs were being evacuated for DPM purposes via a Priority 3 recommendation, which is halfway between Conservative and Aggressive.

What is my conclusion? I’m surprised at the perceived increase in DPM’s aggressiveness. To avoid the extreme memory overcommit, I’ll need to move the DPM slider to Priority 2. I’d also like to get a better understanding of the calculation. I have a difficult time believing that this amount of memory overcommit is deemed acceptable in a neutral configuration (Priority 3), which falls halfway between conservative and aggressive. Beyond that, I’m not a fan of a host continuously entering and exiting standby mode, along with the flurry of vMotion activity that results. This tells me that either the calculation isn’t accounting for the memory pressure that actually occurs once a host goes into standby mode, or the workload patterns coincidentally shift significantly shortly after each DPM operation.

If you are a VMware DPM product manager, please see my next post, Request for UI Consistency.

I don’t understand how this is a fault. For both DRS and DPM, you have set the threshold to apply priority 4 and above. This means any recommendation that is not priority 5 (the lowest priority) will be applied.
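To make the threshold semantics concrete, here is a minimal sketch of how an "apply priority N and above" setting filters recommendations, assuming priorities run from 1 (highest) to 5 (lowest). The `is_applied` function is hypothetical and is not part of any VMware API.

```python
# Hypothetical model of the DRS/DPM threshold slider. Assumption: priorities
# run from 1 (most important) to 5 (least important), and a setting of N
# applies every recommendation with priority 1..N automatically.


def is_applied(recommendation_priority: int, threshold: int) -> bool:
    """Return True if a recommendation at this priority would be applied
    automatically when the slider is set to 'apply `threshold` and above'."""
    if not (1 <= recommendation_priority <= 5 and 1 <= threshold <= 5):
        raise ValueError("priorities and thresholds range from 1 to 5")
    # Lower numbers mean higher priority, so "4 and above" covers 1..4.
    return recommendation_priority <= threshold


applied = [p for p in range(1, 6) if is_applied(p, threshold=4)]
print(applied)  # [1, 2, 3, 4]
```

Under this model, moving the slider to Priority 2, as considered in the post, would stop a Priority 3 evacuation like the one observed in manual mode from being applied automatically: `is_applied(3, 2)` returns `False`.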

In my opinion, the error in your configuration is setting DPM (and DRS) to slightly aggressive. For DPM, this means that power saving is more important than performance, which is what you observe.

If you want to maximise power savings, you may want to use VM reservations to guarantee resources even during DPM activity. Of course, this makes the environment more complex to manage and may have an impact on HA admission control.

Alastair, you’re absolutely right about the priority numbers and which is higher and which is lower. Thank you for the correction. I’ve substantially revised the post to focus on the DPM element. A new post on UI consistency comes out tomorrow morning, reusing much of what I had written and stripped out today.

I’ve had it configured in manual mode in a few clusters for a while, in case the customer wants to enable it at some point. I’ve been a bit surprised at times by how aggressive it is, even at the Level 3 setting.

I’ve never really investigated: does it perhaps tie its calculations to HA? My (rough) impression is that it calculates how many hosts are required to satisfy HA requirements and recommends shutting down the rest.

I don’t really manage the HA slot sizes on those clusters, but the plan was that if I were ever challenged on it, that’s where I would start scratching…

I just read the HA & DRS Technical Deepdive book; it has very good information on how ESX makes its calculations. That said, I was contemplating using DPM in our environment, but the more I see posts about problems waking these hosts (blades using iLO in our case), HA processes not starting correctly, and the increase in vMotion traffic as these hosts go into and come out of standby, the more I question its usefulness. DPM seems like it would be very useful for providers and hosting sites running hundreds or thousands of hosts; they would see a benefit. For smaller shops, I say just leave the hosts on and forget about squeezing out whatever optimization DPM may or may not provide.

One option in the book was to schedule the disabling and re-enabling of DPM: disable it so that all systems are up during periods of high usage (such as when systems are first accessed in the morning), then set a task to re-enable DPM once the business day is basically over. That might be a good option for some. I still don’t think enabling this feature is useful for most shops, though, especially if things stop working or hosts cannot properly exit standby mode. Just my $.02. I’m still a big fan of VMware and love working with it; it’s well thought out.
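The scheduling approach from the book boils down to a time-window check. This is a minimal sketch, assuming a hypothetical weekday window of 07:00 to 18:00 during which DPM should be off. The actual enabling and disabling would still be done with vCenter scheduled tasks or a script, which is not shown here.

```python
from datetime import datetime, time

# Hypothetical business-hours window (an assumption; adjust to your own
# usage pattern). DPM is kept off inside this window on weekdays.
BUSINESS_START = time(7, 0)
BUSINESS_END = time(18, 0)


def dpm_should_be_enabled(now: datetime) -> bool:
    """Return True when DPM may run, i.e. outside weekday business hours."""
    is_weekday = now.weekday() < 5  # Monday=0 ... Friday=4
    in_business_hours = BUSINESS_START <= now.time() < BUSINESS_END
    return not (is_weekday and in_business_hours)


# Monday morning: keep all hosts up. Monday evening and Saturday: allow DPM.
print(dpm_should_be_enabled(datetime(2024, 1, 8, 9, 0)))
print(dpm_should_be_enabled(datetime(2024, 1, 8, 20, 0)))
print(dpm_should_be_enabled(datetime(2024, 1, 6, 10, 0)))
```

Keeping the decision in one small function makes it easy to widen the window (for example, starting earlier to give hosts time to exit standby) without touching the tasks that act on it.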

@MikeA,

As with any design decision, the justification should be made and the impacts and risks identified. At that point, one can evaluate whether or not the design decision makes sense. The pitfalls you describe with DPM are real. However, so is the need for compute elasticity as cloud popularity gains momentum.

I have the Wake on LAN option enabled in the BIOS, but when I go to Configuration > Network Adapters, it says WOL Supported: No.

The NIC is supported as well. Any idea what I am missing or what could be incorrectly configured?