For this blog post, I collaborated with Dawn Theirl (@KokopeIIi on Twitter), who is a Network Engineer in the San Francisco Bay Area. Dawn performs a lot of hands-on work in her day-to-day role as a wired and wireless network guru. We understand that CDP provides benefits for both the network and virtualization platform teams. However, in larger or siloed environments, our two teams don’t necessarily know what the other is seeing in their dashboard. Curiosity prevailed and here we are. In this post, Dawn and I will discuss CDP, its implementation, and what exactly is seen in each of our siloed roles using our respective management tools, as well as the benefits provided by both having and sharing this information.

CDP is a useful troubleshooting tool in networking. When you are given the IP of a host that someone has questions about and you are tracing the IP and MAC from a distribution layer switch down to the access layer, CDP information can tell you which switch to look at next. If you don’t have an accurate network map, it is also useful for getting an idea of how a network is physically laid out by learning which devices are physically connected to each other. CDP operates at Layer 2 (Data Link) of the OSI model. CDP packets are non-routable.

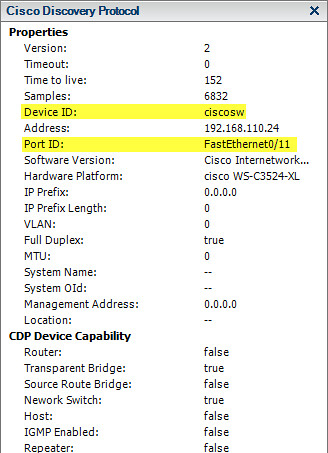

By default, CDP is enabled (and advertising) on Cisco switches and routers. CDP is also enabled on ESX(i) vSwitches, where it is effectively configured as listen. This is where CDP adds value for VMware administrators: the CDP properties of each vmnic in the vSphere Client show information about the upstream switch. The most useful information is highlighted in yellow: the name of the switch which the vmnic is cabled to, as well as the port number on the switch that the network cable is connected to. In access port configurations where 802.1Q VLANs are enabled, the VLAN field will also contain useful information:

From the Cisco switch point of view in the default configuration, we don’t see any information about the ESXi host or its vmnics. This is because the vSwitch tied to the vmnic uplinks is in listen mode only (no advertising). # show cdp neighbors is the command which would display information about other devices advertising information by way of CDP:

So out of the box, ESXi is configured to pull CDP information about the upstream network and this is quite valuable to have for implementation and troubleshooting. However, there is an additional configuration which can be made on the ESXi host which will allow it to provide its own intrinsic data to the Cisco switch via CDP and that is by enabling CDP advertising. This information is useful for troubleshooting which benefits both the network and virtual infrastructure teams by providing a method for close collaboration. Let’s make the additional configuration change and note the additional information which is exposed by the ESXi host.

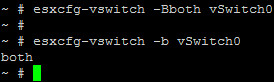

At the ESXi host DCUI, we can examine the CDP status of a vSwitch by issuing the command # esxcfg-vswitch -b vSwitch0. Shown here, vSwitch0 is in listen only mode:

Now let’s change the CDP mode for vSwitch0 to both (meaning both listen and advertise) and then verify the configuration change:

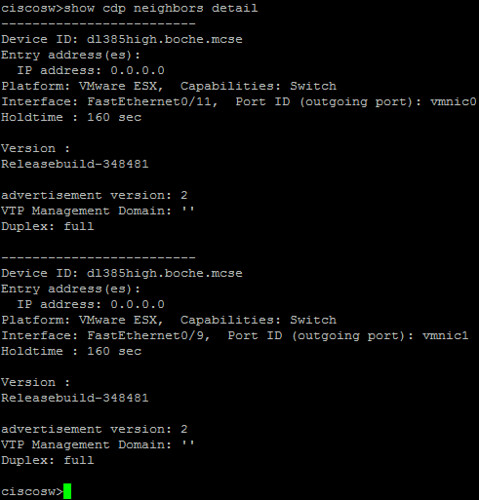
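The change and verification above can also be sketched as a small shell helper built on the two esxcfg-vswitch flags listed in Appendix A. This is only a minimal sketch: the function name `set_cdp_mode` and the `DRY_RUN` variable are illustrative assumptions of mine, not part of the VMware tooling. With `DRY_RUN=1` (the default here) the commands are only printed, so nothing is changed on the host.

```shell
#!/bin/sh
# Hypothetical helper: set_cdp_mode and DRY_RUN are illustrative names,
# not part of esxcfg-vswitch itself.
DRY_RUN="${DRY_RUN:-1}"

set_cdp_mode() {
    vswitch="$1"
    mode="$2"
    # Appendix A lists exactly these four CDP states.
    case "$mode" in
        down|listen|advertise|both) ;;
        *) echo "invalid CDP mode: $mode" >&2; return 1 ;;
    esac
    if [ "$DRY_RUN" = "1" ]; then
        # Print the commands instead of running them.
        echo "esxcfg-vswitch -B $mode $vswitch"
        echo "esxcfg-vswitch -b $vswitch"
    else
        # Set the CDP mode, then print the resulting setting to verify it.
        esxcfg-vswitch -B "$mode" "$vswitch" && esxcfg-vswitch -b "$vswitch"
    fi
}

# Example: switch vSwitch0 from listen to both (listen and advertise).
set_cdp_mode vSwitch0 both
```

Running the same script with `DRY_RUN=0` on the ESXi host would apply the change for real; validating the mode first simply avoids passing a typo to the host.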

At this point, both the Cisco switch and the ESXi host are listening and advertising, which is mutually beneficial to the network and virtual infrastructure teams. Nothing changes visibility-wise on the ESXi side. However, the network team is now able to receive and view CDP advertisements on their Cisco gear from the ESXi hosts. Let’s take a look by issuing the > show cdp neighbors command on the Cisco switch. Note one difference from when I ran this command earlier: CDP neighbor information can be viewed in either user or privileged mode on the switch. With CDP advertisements enabled on the ESXi host, we’re able to see ESXi host information as well as the host vmnic uplinks and the respective ports they’re cabled to on the Cisco switch:

From the switch side I can see what ports the VMs are on. This is useful because, unless you put a description with the host name on a port every time something gets installed (and then moved), you don’t know what is connected to any given port without a lot of effort to backtrack a MAC address to an IP to a hostname. Lots of information here: you get the host name, what port it’s connected to on the switch, and which NIC the host is using for that connection. This is very useful for troubleshooting when a systems admin is questioning whether there are problems on the network because a particular host is having issues. Usually the most the sys admin can tell you is what network the host is on, and the network admin has to trace the IP and then the MAC address to find what port the host is on. With the CDP exchange, once you narrow down what switch the host is on, just issuing the show cdp neighbors command will tell you what port to focus on. One interesting note is that the host advertises itself as a switch instead of a host.

> show cdp neighbors detail provides some additional information about the host such as the build number and CDP version. This detail is not quite as valuable for troubleshooting but nonetheless could come in handy for either a large enterprise or a smaller environment with consolidated roles:

Looking at the [advertised] Cisco Discovery Protocol output from the VM, the important information seen is the switch name, IP address, VLAN, and port the host is connected to. Other things I can see are that the port is set to full duplex, and that it’s a switch vs. a router (don’t laugh, I’ve seen a router with a blade with a small number of ports used for a very small office).

With the implementation details and benefits out of the way, let’s focus a bit on CDP strategy. There are a few approaches to CDP which can be evaluated from the labor, change management, and security perspectives:

- Infrastructure implementation with default configurations – No changes required at implementation time providing the easiest and fastest deployment of ESXi in addition to providing CDP listen mode benefits from the virtual platform point of view. The virtual platform remains secure while upstream network information is advertised to neighbors.

- Disable CDP globally, enable only as needed for the short term – Requires disabling CDP at implementation time in addition to change management time spent temporarily enabling and disabling CDP later on to aid troubleshooting. Most secure from the network and virtual platform standpoint.

- Enable bidirectional CDP globally, always on – Requires enabling CDP both (listen and advertise) at implementation time thereby providing comprehensive information for troubleshooting later on. Least secure; both network and virtual platform information is exposed by CDP advertisements to neighbors.
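On the ESXi side, the three approaches above line up with three of the CDP states accepted by esxcfg-vswitch (see Appendix A). The sketch below is only an illustration of that mapping: the function name `cdp_command_for` and the strategy labels `default`, `disabled`, and `always-on` are hypothetical names I made up for this example. It prints the command rather than running it.

```shell
#!/bin/sh
# Hypothetical helper: the strategy labels and function name are made up
# for this sketch; only the esxcfg-vswitch -B states come from Appendix A.
cdp_command_for() {
    strategy="$1"
    vswitch="${2:-vSwitch0}"
    case "$strategy" in
        default)   mode="listen" ;;  # out-of-box ESXi behavior
        disabled)  mode="down" ;;    # CDP off until needed for troubleshooting
        always-on) mode="both" ;;    # listen and advertise
        *) echo "unknown strategy: $strategy" >&2; return 1 ;;
    esac
    # Print the host-side command for the chosen strategy.
    echo "esxcfg-vswitch -B $mode $vswitch"
}

# Show the host-side command for each of the three strategies.
for s in default disabled always-on; do
    cdp_command_for "$s" vSwitch0
done
```

This covers the host side only. On the Cisco side, the corresponding global switches are the cdp run and no cdp run commands listed in Appendix B.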

I’ve worked with organizations who implement one of, or a combination of, all three. As with many design decisions, philosophy and justifications will vary. A decision here could be made based on the size of the datacenter, distribution of roles, security approach, or the vertical which the business operates in (think regulatory compliance). CDP is of course beneficial to network and virtual platform owners, but it can also aid a hacker who has penetrated the environment and thereby becomes a recipient of the same network information.

Speaking for myself, I’ve gotten a lot of operational benefits from leveraging CDP for troubleshooting. Network engineers often ask me to configure CDP for advertising on the host side. What helps them ultimately helps me in a troubleshooting scenario and can shorten the time we spend focusing on an issue. In customer facing or production environments, every minute of downtime costs and therefore counts.

My preference is to operate with CDP configured for listen on the host side. This configuration provides the most bang for the buck as it is the default out-of-box configuration on both the Cisco and VMware side. In other words, if you do nothing at all, you can reap major benefits with the native configuration when it comes time to troubleshoot or to do capacity and/or SPOF planning for network resources. That said, I get the security side of the discussion and of course I’m not opposed to disabling CDP when compelling requirements or constraints exist.

Aside from the design decisions above, I would be remiss if I did not also mention a potential stability issue (categorize it as a potential risk in your design) that I came across from Cisco. When enabling CDP or leaving CDP enabled in an environment, there is a known CDP issue which should be taken into consideration because it can cause a disruption of the network: CDP Can Consume All Router Memory. When a large number of CDP neighbor announcements are sent, it is possible to consume all available memory on a device. This causes a crash or other abnormal behavior. Refer to Cisco’s Response to the CDP Issue (Document ID: 13621) for more details. This issue is quite old and may no longer be a threat with modern versions of IOS and NX-OS.

CDP is a wonderful tool. However, one obvious weakness in the heterogeneous datacenter is that it is vendor specific to Cisco switches and routers. Other networking vendors don’t support CDP and therefore cannot integrate with it. A newer and similar vendor neutral protocol called LLDP (Link Layer Discovery Protocol) appears to fill the need for the other vendors which choose to support it. At this time, however, VMware is not supporting LLDP, though at least one source claims it is on the VMware roadmap, which is a good thing.

In closing, I’d like to leave the audience with an Appendix style list of VMware and Cisco CDP commands, as well as a few links to additional Cisco resources on the web. I would also like to thank Dawn for her contribution and eager willingness to collaborate with me on this article.

Update 11/17/11: Link Layer Discovery Protocol (LLDP) has been published

Appendix A: ESX(i) esxcfg-vswitch (or vicfg-vswitch) parameters:

| Parameter | Description |
| --- | --- |
| -B or --set-cdp | Set the CDP status for a given virtual switch. To set, pass one of “down”, “listen”, “advertise”, “both”. |
| -b or --get-cdp | Print the current CDP setting for this switch. |

Appendix B: Cisco switch commands (some require privileged mode):

| Command | Description |
| --- | --- |
| cdp run | Enables CDP globally (on by default). |
| cdp enable | Enables CDP on an interface. |
| cdp advertise-v2 | Enables CDP Version-2 advertising functionality on a device. |
| clear cdp counters | Resets the traffic counters to zero. |
| clear cdp table | Deletes the CDP table of information about neighbors. |
| debug cdp adjacency | Monitors CDP neighbor information. |
| show cdp | Displays global CDP information such as the interval between transmissions of CDP advertisements, the number of seconds the CDP advertisement is valid for a given port, and the version of the advertisement. |
| show cdp neighbors | Displays information about neighbors. |
| show cdp neighbors detail | Displays more detail about neighboring devices. |
| show cdp entry * | Displays information about all devices. |
| show cdp interface [type number] | Displays information about interfaces on which CDP is enabled. |
| show cdp traffic | Displays CDP counters, including the number of packets sent and received and checksum errors. |
| cdp timer seconds | Specifies frequency of transmission of CDP updates. |
| cdp holdtime seconds | Specifies the amount of time a receiving device should hold the information sent by your device before discarding it. |
| no cdp run | Turns off CDP globally. |

Appendix C: Helpful CDP resources from Cisco and VMware:

Configuring Cisco Discovery Protocol (CDP)