If you run Windows Server 2008 R2 or Windows 7 as a guest VM on vSphere, you may be aware that it was advised in VMware KB Article 1011709 that the SVGA driver should not be installed during VMware Tools installation. If I recall correctly, this was due to a stability issue which was seen in specific, but not all, scenarios:

If you plan to use Windows 7 or Windows 2008 R2 as a guest operating system on ESX 4.0, do not use the SVGA drivers included with VMware Tools. Use the standard SVGA driver instead.

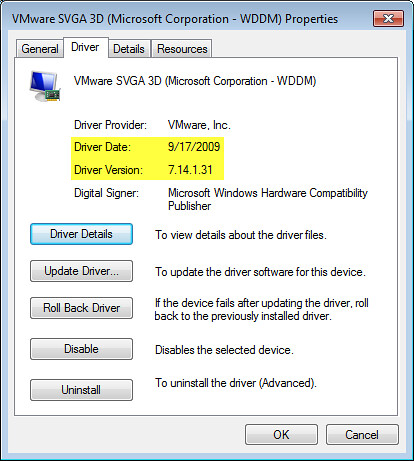

Since the SVGA driver is installed by default in a typical installation, it was necessary to perform a custom installation (or scripted perhaps) to exclude the SVGA driver for these guest OS types. Alternatively, perform a typical VMware Tools installation and remove the SVGA driver from the Device Manager afterwards. What you ended up with, of course, is a VM using the Microsoft Windows supplied SVGA driver and not the VMware Tools version shown in the first screenshot. The Microsoft Windows supplied SVGA driver worked and provided stability as well, however one side effect was that mouse movement via VMware Remote Console felt a bit sluggish.

Beginning with ESX(i) 4.0 Update 1 (released 11/19/09), VMware changed the behavior and revised the above KB article in February, letting us know that they now package a new version of the SVGA driver in VMware Tools in which the bits are populated during a typical installation but not actually enabled:

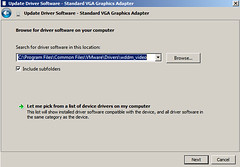

The most effective solution is to update to ESX 4.0 Update 1, which provides a new WDDM driver that is installed with VMware Tools and is fully supported. After VMware Tools upgrade you can find it in C:\Program Files\Common Files\VMware\Drivers\wddm_video.

After a typical VMware Tools installation, you’ll still see a standard SVGA driver installed. Following the KB article, head to Windows Device Manager and update the driver to the bits located in C:\Program Files\Common Files\VMware\Drivers\wddm_video:

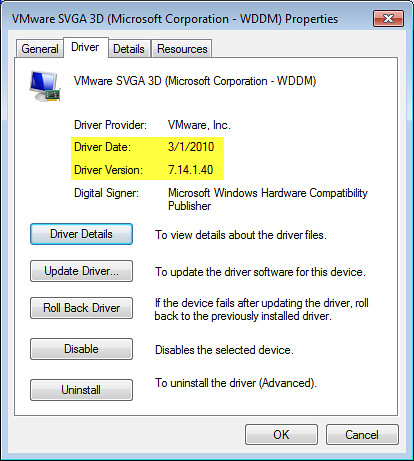

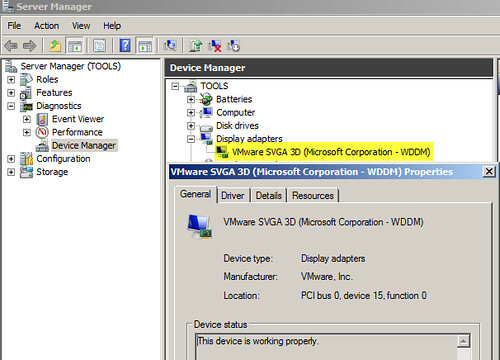

The result is the new wddm driver, which ships with the newer version of VMware Tools, is installed:

After a reboot, the crisp and precise mouse movement I’ve become accustomed to over the years with VMware has returned. The bummer here is that while the appropriate VMware SVGA drivers get installed in previous versions of Windows guest operating systems, Windows Server 2008 R2 and Windows 7 require manual installation steps, much like VMware Tools installation on Linux guest VMs. Add to this the fact that the automated installation/upgrade of VMware Tools via VMware Update Manager (VUM) does not enable the wddm driver. In short, getting the appropriate wddm driver installed for many VMs will require manual intervention or scripting. One thing you can do is to get the wddm driver installed in your Windows Server 2008 R2 and Windows 7 VM templates. This will ensure VMs deployed from the templates have the wddm driver installed and enabled.

The wddm driver install method from VMware is helpful for the short term, however, it’s not the scalable and robust long term solution. We need an automated solution from VMware to get the wddm driver installed. It needs to be integrated with VUM. I’m interested in finding out what happens with the next VMware Tools upgrade – will the wddm driver persist, or will the VMware Tools upgrade replace the wddm version with the standard version? Stay tuned.

Update 11/6/10: While working in the lab tonight, I noticed that with vSphere 4.1, the correct wddm video driver is installed as part of a standard VMware Tools installation on Windows 7 Ultimate x64 – no need to manually replace the Microsoft video driver with VMware’s wddm version as this is done automatically now.

Update 12/10/10: As a follow up to these tests, I wanted to see what happens when the wddm driver is installed under ESX(i) 4.0 Update 1 and its corresponding VMware Tools, and then the VM is moved to an ESX(i) 4.1 cluster and the VMware Tools are upgraded. Does the wddm driver remain in tact, or will the 4.1 tools upgrade somehow change the driver? During this test, I opted to use Windows 7 Ultimate 32-bit as the guest VM guinea pig. A few discoveries were made, one of which was a surprise:

1. Performing a standard installation of VMware Tools from ESXi 4.0 Update 1 on Windows 7 32-bit will automatically install the wddm driver, version 7.14.1.31 as shown below. No manual steps were required to install this driver forcing a 2nd reboot. I wasn’t counting on this. I expected the Standard VGA Graphics Adapter driver to be installed as seen previously. This is good.

After moving the VM to a 4.1 cluster and performing the VMware Tools upgrade, the wddm driver was left in tact, however, its version was upgraded to 7.14.1.40. This is also good in that the tools ugprade doesn’t negatively impact the desired results of leveraging the wddm driver for best graphics performance.

More conclusive testing should be done with Windows 7 and Windows Server 2008 R2 64-bit to see if the results are the same. I’ll save this for a future lab maybe.