I talk with a lot of customers including those confined to vSphere, storage, and general datacenter management roles. The IT footprint size varies quite a bit between discussions as does the level of experience across technologies. However, one particular topic seems to come up at regular intervals when talking vSphere and storage: Thin Provisioning – where exactly is the right place for it in the stack? At the SAN layer? At the vSphere layer? Both?

Virtualization is penetrating datacenters from multiple angles: compute, storage, network, etc. Layers of abstraction seem to be multiplying to provide efficiency, mobility, elasticity, high availability, etc. The conundrum we’re faced with is that some of these virtualization efforts converge. As with many decisions to be made, flexibility yields an array of choices. Does the convergence introduce a conflict between technologies? Do the features “stack”? Do they complement each other? Is one solution better than the other in terms of price or performance?

I have a few opinions on thin provisioning. To be clear, this discussion revolves around block storage; on NFS datastores, virtual machine disks are natively thin provisioned.

1. Deploy and leverage with confidence. Generally speaking, thin provisioning at either the vSphere or storage layer has proven itself as both cost effective and reliable for the widest variety of workloads including most tier 1 applications. Corner cases around performance needs may present themselves and full provisioning may provide marginal performance benefit at the expense of raw capacity consumed up front in the tier(s) where the data lives. However, full provisioning is just one of many ways to extract additional performance from existing storage. Explore all available options. For everything else, thinly provision.

2. vSphere or storage vendor thin provisioning? From a generic standpoint, it doesn’t matter much, as long as you choose at least one to achieve the core benefits of thin provisioning. Where to thin provision isn’t really a question of what’s right or what’s wrong. It’s about where the integration is the best fit with respect to other storage hosts that may be in the datacenter and what’s appropriate for the organizational roles. Outside of RDMs, thin provisioning at the vSphere or storage layer yields about the same storage efficiency for vSphere environments. For vSphere environments alone, the decision can be boiled down to reporting, visibility, ease of use, and any special integration your storage vendor might have tied to thin provisioning at the storage layer.

The table below covers three scenarios of thin provisioning most commonly brought up. It reflects reporting and storage savings component at the vSphere and SAN layers. In each of the first three use cases, a VM with 100GB of attached .vmdk storage is provisioned of which a little over 3GB is consumed by an OS and the remainder is unused “white space”.

- A) A 100GB lazy zero thick VM is deployed on a 1TB thinly provisioned LUN.

- The vSphere Client is unaware of thin provisioning at the SAN layer and reports 100GB of the datastore capacity provisioned into and consumed.

- The SAN reports 3.37GB of raw storage consumed to SAN Administrators. The other nearly 1TB of raw storage remains available on the SAN for any physical or virtual storage host on the fabric. This is key for the heterogeneous datacenter where storage efficiency needs to be spread and shared across different storage hosts beyond just the vSphere clusters.

- This is the default provisioning option for vSphere as well as some storage vendors such as Dell Compellent. Being the default, it requires the least amount of administrative overhead and deployment time as well as providing infrastructure consistency. As mentioned in the previous bullet, thin provisioning at the storage layer provides a benefit across the datacenter rather than exclusively for vSphere storage efficiency. All of these benefits really make thin provisioning at the storage layer an overwhelmingly natural choice.

- B) A 100GB thin VM is deployed on a 1TB fully provisioned LUN.

- The vSphere Client is aware of thin provisioning at the vSphere layer and reports 100GB of the datastore capacity provisioned into but only 3.08GB consumed.

- Because this volume was fully provisioned instead of thin provisioned, SAN Administrators see 1TB consumed up front from the pool of available raw storage. Nearly 1TB of unconsumed datastore capacity remains available to the vSphere cluster only. Thin provisioning at the vSphere layer does not leave the unconsumed raw storage available to other storage hosts on the fabric.

- This is not the default provisioning option for vSphere, nor is it the default volume provisioning option for shared storage. Thin provisioning at the vSphere layer yields roughly the same storage savings as thin provisioning at the SAN layer. However, only vSphere environments can expose and take advantage of the storage efficiency. Because it is not the default deployment option, it requires a slightly higher level of administrative overhead and can lead to environment inconsistency. On the other hand, for SANs which do not support thin provisioning, vSphere thin provisioning is a fantastic option, and the only remaining option for block storage efficiency.

- C) A 100GB thin VM is deployed on a 1TB thinly provisioned LUN – aka thin on thin.

- Storage efficiency is reported to both vSphere and SAN Administrator dashboards.

- The vSphere Client is aware of thin provisioning at the vSphere layer and reports 100GB of the datastore capacity provisioned into but only 3.08GB consumed.

- The SAN reports 3.08GB of raw storage consumed. The other nearly 1TB of raw storage remains available on the SAN for any physical or virtual storage host on the fabric. Once again, the efficiency benefit is spread across all hosts in the datacenter.

- This is not the default provisioning option for vSphere and, as a result, the same inconsistencies mentioned above may arise. More importantly, thin provisioning at the vSphere layer on top of thin provisioning at the SAN layer doesn’t provide a significant amount of additional storage efficiency. The numbers below are slightly different, but I’m going to attribute that difference to a non-linear delta caused by VMFS formatting and call them a wash in the grand scheme of things. While thin on thin doesn’t adversely impact the environment, the two approaches don’t stack. Compared to just thin provisioning at the storage layer, the draw for this option is for reporting purposes only.

What I really want to call out is the raw storage consumed in the last column. Each cell outlined in red reveals the net raw storage consumed before RAID overhead – and conversely paints a picture of storage savings and efficiency allowing a customer to double dip on storage or provision capacity today at next year’s cost – two popular drivers for thin provisioning.

Vendor Integration: the Virtual Disk Storage and Datastore Capacity columns are what vSphere Administrators see in the vSphere Client; the Page Pool Capacity columns are what SAN Administrators see on the virtualized storage.

| Scenario | 100GB VM | 1TB LUN | Virtual Disk Storage: Provisioned | Virtual Disk Storage: Consumed | Datastore Capacity: Provisioned | Datastore Capacity: Consumed | Page Pool Capacity: Provisioned | Page Pool Capacity: Consumed+ |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| A | Lazy Thick | Thin Provision | 100GB | 100GB | 1TB | 100GB | 1TB | 3.37GB* |
| B | Thin | Full Provision | 100GB | 3.08GB | 1TB | 3.08GB | 1TB | 1TB |
| C | Thin | Thin Provision | 100GB | 3.08GB | 1TB | 3.08GB | 1TB | 3.08GB* |
| | 1TB RDM | 1TB LUN | | | | | | |
| D | vRDM | Thin Provision | 1TB | 1TB | n/a | n/a | 1TB | 0GB |
| E | pRDM | Thin Provision | 1TB | 1TB | n/a | n/a | 1TB | 0GB |

+ Numbers exclude RAID overhead to provide accurate comparisons

* 200MB of pages consumed by the VMFS-5 file system was subtracted from the total to provide accurate comparisons
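As a rough illustration of scenarios A through C, here is a minimal Python sketch of what each layer would report. It is a simplified model, not a measurement: the `Scenario` class, the `reported_consumption` function, and the 3.08GB written figure are assumptions for illustration, and the model ignores the small VMFS formatting delta that makes scenario A read 3.37GB on the SAN in the table.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    vmdk_thin: bool  # thin virtual disk at the vSphere layer?
    lun_thin: bool   # thinly provisioned LUN at the SAN layer?


def reported_consumption(s, vm_gb=100.0, written_gb=3.08, lun_gb=1024.0):
    """Return (datastore_consumed_gb, san_raw_consumed_gb) for one scenario."""
    # The vSphere Client counts a thick disk as fully consumed on the datastore;
    # a thin disk is counted only by the blocks actually written.
    datastore = written_gb if s.vmdk_thin else vm_gb
    # A thin LUN backs only the pages that were written. A lazy zeroed thick
    # disk does not write zeros up front, so it also stays small on a thin LUN.
    san = written_gb if s.lun_thin else lun_gb
    return datastore, san


scenarios = [
    Scenario("A: lazy thick VM on thin LUN", vmdk_thin=False, lun_thin=True),
    Scenario("B: thin VM on full LUN", vmdk_thin=True, lun_thin=False),
    Scenario("C: thin VM on thin LUN", vmdk_thin=True, lun_thin=True),
]

for s in scenarios:
    ds, san = reported_consumption(s)
    print(f"{s.name}: datastore consumed {ds:g}GB, SAN raw consumed {san:g}GB")
```

In this model, A and C consume the same raw storage on the SAN, which is the "thin on thin doesn’t stack" point: the second layer changes only what the vSphere Client reports.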

There are two additional but less mainstream considerations to think about: virtual and physical RDMs. Neither can be thinly provisioned at the vSphere layer. Storage efficiency can only come from and be reported on the SAN.

- D and E) Empty 1TB RDMs (both virtual and physical) are deployed on 1TB LUNs thinly provisioned at the storage layer.

- Historically, the vSphere Client has always been poor at providing RDM visibility. In this case, the vSphere Client is unaware of thin provisioning at the SAN layer and reports 1TB of storage provisioned (from somewhere unknown – the ultimate abstraction) and consumed.

- The SAN reports zero raw storage consumed to SAN Administrators. 2TB of raw storage remains available on the SAN for any physical or virtual storage host on the fabric.

- Again, thin provisioning from your storage vendor is the only way to write thinly into RDMs today.

So what is my summarized recommendation on thin provisioning in vSphere, at the SAN, or both? I’ll go back to what I mentioned earlier: if the SAN is shared outside of the vSphere environment, then thin provisioning should be performed at the SAN level so that all datacenter hosts on the storage fabric can leverage provisioned but not yet allocated raw storage. If the SAN is dedicated to your vSphere environment, then there is really no right or wrong answer. At that point it’s going to depend on your reporting needs, maybe the delegation of roles in your organization, and of course the type of storage features you may have that combine with thin provisioning to add value. If you’re a Dell Compellent Storage Center customer, let the vendor-provided defaults guide you: lazy zero thick virtual disks on datastores backed by thinly provisioned LUNs. Thin provisioning at the storage layer is also going to save customers a bundle in unconsumed tier 1 storage costs. Instead of islands of tier 1 pinned to a vSphere cluster, the storage remains freely available in the pool for any other storage host with tier 1 performance needs. For virtual or physical RDMs, thin provisioning on the SAN is the only available option. I don’t recommend thin on thin to compound or double space savings because it simply does not work the way some expect it to. However, if there is a dashboard reporting need, go for it.
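The recommendation above can be written out as a small decision function. This is only a sketch of the reasoning in this post: the function name, its parameters, and the returned labels are hypothetical, not part of any vSphere or storage vendor tool.

```python
def recommend_thin_layer(san_supports_thin, san_shared_beyond_vsphere, uses_rdms):
    """Return 'san', 'vsphere', or 'either' following the guidance in this post."""
    if not san_supports_thin:
        # vSphere thin provisioning is the only remaining option for block
        # storage efficiency (RDMs cannot be thinned at the vSphere layer).
        return "vsphere"
    if san_shared_beyond_vsphere or uses_rdms:
        # Shared fabrics and RDMs only benefit from thin provisioning at the SAN.
        return "san"
    # Dedicated SAN: no right or wrong answer; decide on reporting needs,
    # organizational roles, and vendor integration.
    return "either"


print(recommend_thin_layer(san_supports_thin=True,
                           san_shared_beyond_vsphere=True,
                           uses_rdms=False))
```

Thin on thin is deliberately left out as a result: it adds reporting in the vSphere Client rather than extra savings, so it sits on top of the "san" answer when a dashboard need exists.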

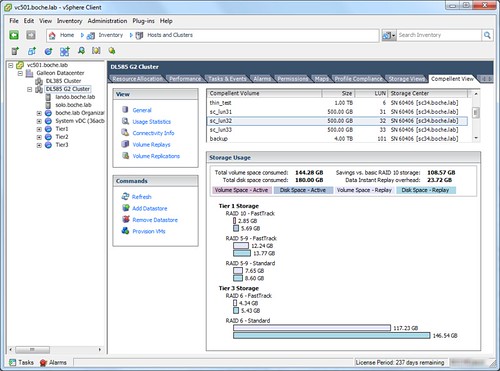

Depending on your storage vendor, you may have integration available to you that will provide management and reporting across platforms. For instance, suppose we roll with option A above: thin provisioning at the storage layer. Natively we don’t have storage efficiency visibility within the vSphere Client. However, storage vendor integration through VASA or a vSphere Client plug-in can bring storage details into the vSphere Client (and vice versa). One example is the vSphere Client plug-in from Dell Compellent shown below. Aside from the various storage and virtual machine provisioning tasks it is able to perform, it brings a SAN Administrator’s dashboard into the vSphere Client. Very handy in small to medium sized shops where roles spread across various technological boundaries.

Lastly, I thought I’d mention UNMAP – 1/2 of the 4th VAAI primitive for block storage. I wrote an article last summer called Storage: Starting Thin and Staying Thin with VAAI UNMAP. For those interested, the UNMAP primitive works only with thin provisioning at the SAN layer on certified storage platforms. It was not intended to and does not integrate with thinly provisioned vSphere virtual disks alone. Thin .vmdks in which data has been deleted from within will not dehydrate unless storage vMotioned. Raw storage pages will remain “pinned” to the datastore where the .vmdk resides until it is moved or deleted. Only then can the pages be returned to the pool, and only if the datastore resides on a thin provisioned LUN.

And **really** annoyingly, T10 UNMAP has been disabled by VMware in 5.0 and 5.1 and cannot be re-enabled, so reclaiming space is now a very manual task.

Brett

Hi – I’m relatively new to storage but I think that a big caveat to SAN device thin provisioning is Storage DRS.

As Cormac Hogan points out (https://blogs.vmware.com/vsphere/2012/03/thin-provisioning-whats-the-scoop.html) one reason *not* to use SAN-side thin provisioning is Storage DRS. For sDRS to function, it needs to know the actual free space on the datastores. The danger arises as follows:

1. SAN device overcommit in place where total LUN sizes exceed physical storage such as 10TB total space, but 15 thin provisioned 1TB LUNs created.

2. Multiple SAN devices used.

3. VMware datastores created mapped to each LUN (from multiple storage processors) and added to Storage cluster with sDRS enabled.

The sDRS problem wouldn’t show up if only a single thin-provisioned SAN device is used. Instead, simple VM creation / increased disk storage would at some point fail. Presumably storage admin gets an alert long before the overcommit truly occurs so the danger here is pretty low.

With multiple SAN devices the situation is different, and the sDRS auto-balance feature could definitely cause a problem. Consider what happens if sDRS looks at free space on each datastore and makes its load-balancing decisions to Storage vMotion vmdks. That could easily cause a mass migration to an “available” SAN storage device that uses array-level thin provisioning.

In that case – the Storage vMotion might occur too quickly for the storage admin to fix.

Thoughts are welcome!

You are correct in that a full understanding of all technologies and their upstream/downstream impacts is needed to properly design, deploy, and manage a virtualized datacenter. As you point out, some of vSphere’s advanced storage features don’t integrate seamlessly with modern virtualized storage arrays.
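To put rough numbers on the overcommit scenario described in the comment above, here is a minimal Python sketch. The 10TB pool and fifteen 1TB thin LUNs come from the comment; the 0.5TB written per LUN and the `overcommit_summary` helper are assumptions made purely for illustration.

```python
def overcommit_summary(physical_tb, lun_sizes_tb, lun_used_tb):
    """Compare the free space datastores advertise with what the pool can back."""
    provisioned = sum(lun_sizes_tb)
    used = sum(lun_used_tb)
    return {
        # Ratio of capacity promised to hosts versus physical capacity.
        "overcommit_ratio": provisioned / physical_tb,
        # Free space as seen per datastore, summed across all datastores.
        "datastore_free_tb": provisioned - used,
        # Free space the array can actually still back with raw storage.
        "pool_free_tb": physical_tb - used,
    }


# 10TB physical pool, 15 thin LUNs of 1TB, each assumed to be half written.
summary = overcommit_summary(10.0, [1.0] * 15, [0.5] * 15)
print(summary)
```

With these assumed inputs, the datastores together advertise 7.5TB free while the pool can only back 2.5TB more, which is the gap a free-space-driven balancing decision cannot see.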

Hi,

Thanks for your article, it is very simple to understand, I have tested it to my boss 😉

I’m looking for a way to change the default “disk format provisioning”. Do you know of one?

CMA.