Day 2 of 3 is in the books. We started the morning with Module 4, VMware vSphere Virtual Datacenter Design. The discussion included topics such as:

- vCenter Server requirements, sizing, placement, and high availability

- vCenter and VUM database sizing and placement

- Clusters

- Size

- HA, failover, isolation, design

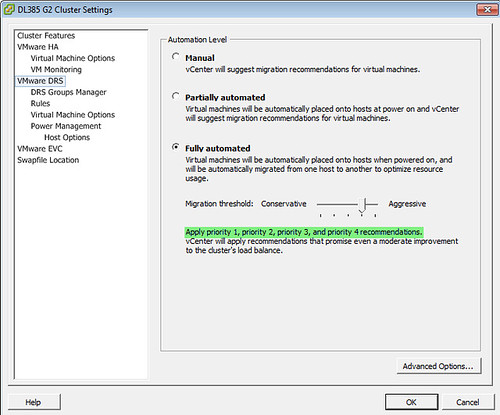

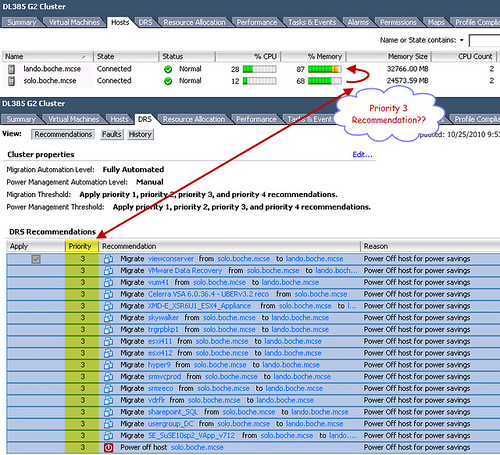

- DRS

- FT

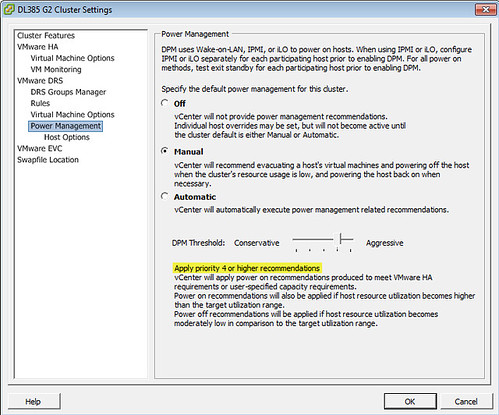

- DPM

- Resource Pools

- Shares

- Reservations

- A lot of networking, including the standard vSwitch, vNetwork Distributed Switch, and the Cisco Nexus 1000V

- FCoE

- VLANs

- PVLANs

- Load balancing policies

- Link State and Beacon Probing network failure detection (beacons are sent once per second per pNIC per VLAN; they are sent whether or not beacon probing is enabled, and only an advanced VMkernel setting permanently disables them)

- 1Gb/10Gb Ethernet

- Security

- Firewalls

- Port communication

- Spanning Tree/PortFast

- Jumbo frames

- IPv6

- DNS

- VMDirectPath I/O (one VM per PCI slot, so multi-port adapters cannot be shared between VMs; no vMotion, DRS, HA, or hot-add)
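
To put the beacon rate from the list above in perspective, here is a rough back-of-the-envelope sketch in Python. It simply multiplies out the "once per second per pNIC per VLAN" figure from class. The function name and the example pNIC/VLAN counts are my own hypothetical choices, and it assumes that rate applies uniformly to every uplink and VLAN on the vSwitch.

```python
def beacon_frames_per_second(pnics: int, vlans: int, rate_per_second: int = 1) -> int:
    """Estimate beacon frames sent per second by one vSwitch.

    Assumes one beacon per second per pNIC per VLAN (the figure from class);
    rate_per_second is a hypothetical knob for illustration only.
    """
    if pnics < 0 or vlans < 0 or rate_per_second < 0:
        raise ValueError("counts and rate must be non-negative")
    return pnics * vlans * rate_per_second


if __name__ == "__main__":
    # Hypothetical host layouts: (uplinks on the vSwitch, VLANs trunked to it)
    for pnics, vlans in [(2, 10), (4, 50)]:
        total = beacon_frames_per_second(pnics, vlans)
        print(f"{pnics} pNICs x {vlans} VLANs = {total} beacons/sec")
```

The takeaway is that beacon traffic grows with the product of uplinks and VLANs, so a heavily trunked vSwitch generates noticeably more of it than a simple two-uplink setup.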

We completed a lab or two today as well. We made some design decisions around vCenter, databases, and networking. Along with those design decisions, we provided justifications and impacts. This process is very familiar to me, as I spent a lot of time providing information like this when filling out the VCDX defense application.

One thing I noticed tonight that I hadn’t seen before is that VMware posted an Adobe Flash demonstration of the new VCAP-DCD exam. Take a look. This will help candidates be better prepared overall for the exam experience. Exam time is valuable – you don’t want to waste it trying to learn the UI.

Tomorrow we start with Module 6, VMware vSphere Storage Design. I expect a lot of time to be spent here, as the options for storage are vast. The instructor hails from EMC and I’m sure he has plenty to say about storage.

On the subject of storage, the instructor passed along some tidbits on NAS device offerings from QNAP. In particular, take a look at the TS-239 PRO II Turbo NAS. At 83.6MB/s throughput, it beats the pants off any other consumer-based NAS appliance on the market (even Iomega). Cisco also rebrands this NAS model as the NSS322, so you can find it there as well. Lastly, take a look at smallnetbuilder.com. This site reviews wireless equipment as well as NAS appliances for the public. They have a nice chart rating most of the NAS appliances out there. It is there that you can see how fast the QNAP unit above screams compared to the competition.